
AWS releases built-in network policy enforcement for AWS EKS

Discover the latest advancement in AWS EKS as AWS unveils built-in support for enforcing Kubernetes network policies using the native VPC CNI, and simplify the implementation challenges with the open-source Otterize intents operator and network mapper.

Written by: David G. Simmons & Ori Shoshan

Published: Aug 31, 2023

Read time: 4 minutes

AWS has announced built-in support for enforcing Kubernetes network policies with the native VPC CNI. This was one of the most requested features on the AWS containers roadmap. By default, Kubernetes allows all pods to communicate with no restrictions. With Kubernetes network policies, you can restrict traffic and achieve zero trust between the workloads in your cluster.
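For reference, this is roughly what "restricting traffic" looks like with the standard Kubernetes NetworkPolicy API (nothing here is AWS- or Otterize-specific). This minimal sketch assumes a hypothetical namespace named `demo` and a cluster where network policy enforcement is enabled. The empty `podSelector` selects every pod in that namespace, so all inbound traffic to those pods is denied until some other policy allows it.

```yaml
# Standard Kubernetes NetworkPolicy: deny all ingress to every pod in one namespace.
# The namespace name "demo" is a hypothetical example.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: default-deny-ingress
  namespace: demo
spec:
  podSelector: {}    # empty selector = all pods in this namespace
  policyTypes:
    - Ingress        # no ingress rules are listed, so all inbound traffic is denied
```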

Previously, you had to deploy a third-party network policy controller or replace the CNI entirely, which can be very complicated for an existing cluster. And you probably do want to keep the VPC CNI, since it lets your Kubernetes pods communicate directly with services and other workloads in the VPC network.

However, network policies are difficult to implement:

- It’s an all-or-nothing endeavor: once a server is covered by a policy, allowing one client blocks every other client unless you explicitly allow them too.

- Policies are placed on servers, but only clients really know which servers they are supposed to connect to.

- It’s difficult to keep the labels of many different services in sync so that network policies allow the correct services, especially when ownership of those services is split across different teams in the organization. Yet you must get it right the first time, or access will be blocked.

- Having many different network policies on a single node can have performance implications, since it results in many different rules that must be evaluated for each packet.

- It is practically impossible to know ahead of time, from analyzing the policies alone, whether applying a network policy will block workloads.
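To make the ownership and label problems concrete, here is a hand-written NetworkPolicy of the kind the server's team would have to maintain. All names, labels, namespaces, and the port are hypothetical. Because the policy selects the server pods, their ingress becomes default-deny, and the server team has to know the client's namespace and labels exactly.

```yaml
# Hand-written policy owned by the SERVER team. All names, labels, and the port are hypothetical.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-client-to-server
  namespace: server-team
spec:
  # Selecting the server pods makes their ingress default-deny:
  # only traffic matched by a rule below (or by another policy) is allowed.
  podSelector:
    matchLabels:
      app: server
  policyTypes:
    - Ingress
  ingress:
    - from:
        # namespaceSelector and podSelector in the SAME list item are ANDed:
        # only pods labeled app=client in the client-team namespace match.
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: client-team
          podSelector:
            matchLabels:
              app: client
      ports:
        - protocol: TCP
          port: 8080
```

If another team's client later needs access, someone must edit this policy owned by the server team, and if the client's labels drift, the client is silently blocked.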

Try out the open-source Otterize intents operator and network mapper to solve these problems. They can also manage other kinds of access controls, such as Kafka ACLs, Istio authorization policies, and (coming soon!) AWS RDS PostgreSQL and AWS IAM policies:

1. Clear ownership: Each client declares which servers it needs to call by creating a ClientIntents resource in its own namespace, managed by the team that owns the client. You no longer add your client to a network policy protecting a server owned by another team, often in another namespace altogether. The intents operator then aggregates the client intents for each server and creates a single network policy for that server. This means that only one resource needs to change when a client changes.

```yaml
apiVersion: k8s.otterize.com/v1alpha2
kind: ClientIntents
metadata:
  name: client
spec:
  service:
    name: client
  calls:
    - name: server
```

2. Automatically generate intents: Use the network mapper to autogenerate client intents based on existing traffic. The network mapper captures DNS traffic in your cluster and generates ClientIntents resources for each client, which you can then push to Git and deploy to your cluster.

```
$ otterize network-mapper export
apiVersion: k8s.otterize.com/v1alpha2
kind: ClientIntents
metadata:
  name: client
spec:
  service:
    name: client
  calls:
    - name: server
---
apiVersion: k8s.otterize.com/v1alpha2
kind: ClientIntents
# [...]
# and more! For all clients in your cluster, or all clients in a namespace.
```

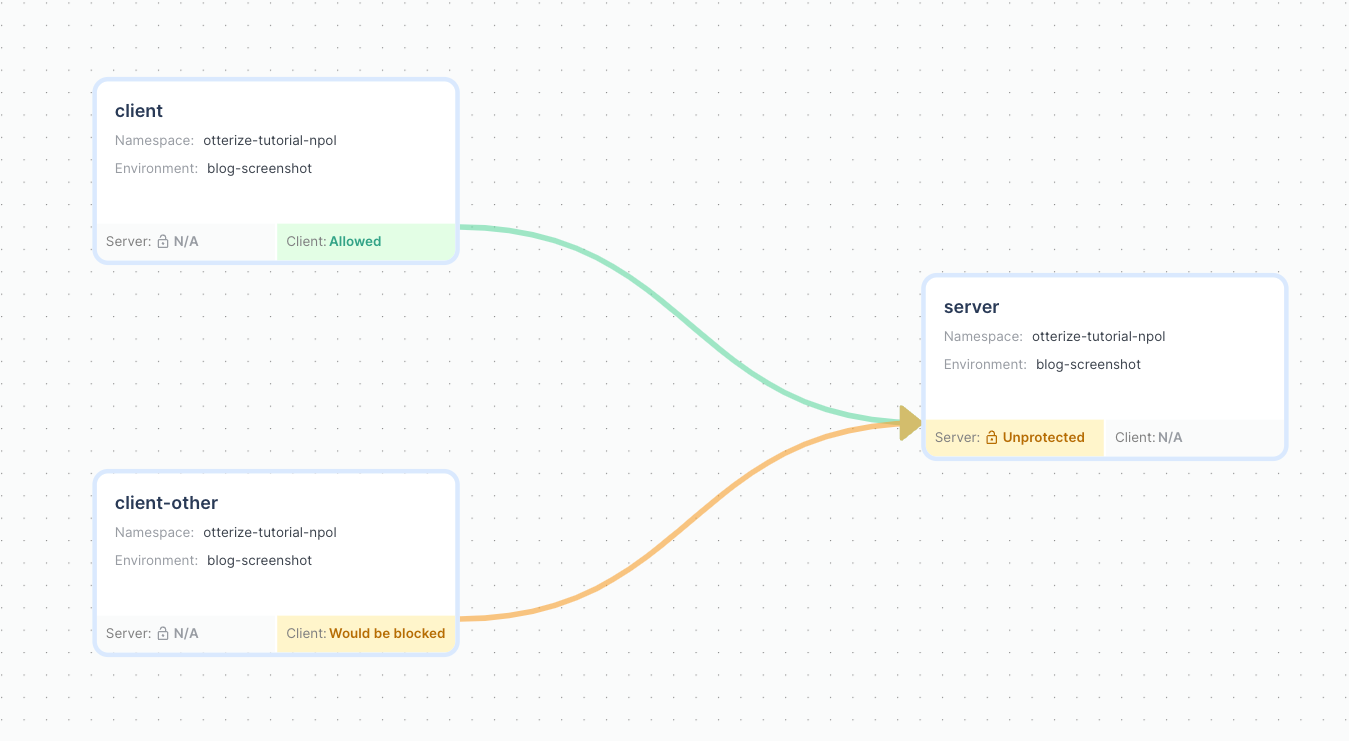

3. See what’s happening: Optionally, connect the intents operator and network mapper to Otterize Cloud to display a visual map of access in your cluster and see which connections are allowed and which are blocked. This information is also available through an API for automation.

4. Do it gradually, not all-or-nothing: Enable shadow mode for the intents operator. In shadow mode, the operator does not create network policies yet, but Otterize Cloud can show you what would happen given the current intents and live traffic.

In the access map, a green line indicates that intents are declared and access would be allowed if the server were protected. A yellow line indicates that access would be blocked if the server were protected, but is not blocked right now.

5. Enable enforcement when ready, service by service or for the entire cluster: When you’re ready to protect a single server, create a ProtectedService resource for it. This creates a default-deny network policy for that server while allowing access from clients that have declared ClientIntents for it. When you’re ready to protect your entire cluster, switch the intents operator to active mode, which creates network policies for all declared ClientIntents.

```yaml
apiVersion: k8s.otterize.com/v1alpha2
kind: ProtectedService
metadata:
  name: server-protectedservice
spec:
  name: server
```
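To show what "default deny plus allow declared clients" means in plain Kubernetes terms, here is a hand-written sketch. It is illustrative only and is not the exact set of objects the intents operator generates (the operator uses its own names and labels). The `app` labels and the second client `client-b` are hypothetical, and everything is assumed to live in the same namespace. Network policies are additive, so the second policy re-opens access only for the listed clients.

```yaml
# Illustrative only: NOT the exact objects the intents operator creates.
# Labels (app=...) and the second client "client-b" are hypothetical;
# all pods are assumed to be in the namespace where these are applied.

# 1) Default deny: selecting the server pods with no ingress rules blocks all inbound traffic.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: server-default-deny
spec:
  podSelector:
    matchLabels:
      app: server
  policyTypes:
    - Ingress
---
# 2) One aggregated allow policy for the server, listing every client that
#    declared an intent to call it. Because policies are additive, this
#    re-opens access only for the listed clients.
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: server-allow-declared-clients
spec:
  podSelector:
    matchLabels:
      app: server
  policyTypes:
    - Ingress
  ingress:
    - from:
        # Separate list items are ORed: either client may connect.
        - podSelector:
            matchLabels:
              app: client      # the client that declares "calls: server"
        - podSelector:
            matchLabels:
              app: client-b    # a second, hypothetical client
```

Adding or removing a client changes only the aggregated allow list, which mirrors the "one resource per client change" property described in step 1.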

Join the platform teams that have deployed this to staging in 15 minutes and to production in days, with zero configuration.

Deploy the intents operator and network mapper:

```shell
helm repo add otterize https://helm.otterize.com
helm repo update
helm install otterize otterize/otterize-kubernetes -n otterize-system --create-namespace
```

Install the CLI and autogenerate intents:

```
$ brew install otterize/otterize/otterize-cli
$ otterize mapper export
apiVersion: k8s.otterize.com/v1alpha2
kind: ClientIntents
metadata:
  name: client
  namespace: otterize-tutorial-eks
spec:
  service:
    name: client
  calls:
    - name: server
```

And apply your intents:

```shell
$ otterize mapper export | kubectl apply -f -   # or commit into your Helm chart for a real deployment
```

Want to see it in action? Check out a mini-tutorial that walks you through setting up an EKS cluster and managing network policies with Otterize.
