
Scheduler vs. API Proxy: Balancing Kubernetes data-plane and control-plane for optimal zero-trust IAM security with Otterize

Discover how to automate zero-trust IAM security for EKS applications and AWS resources using AWS Controllers for Kubernetes (ACK) and Otterize.

Written by Nic Vermandé

Published: Apr 29 2024

Read time: 22 minutes


Ever since Kubernetes entered the tech scene as a portable, extensible, open source platform for managing containerized workloads and services, it has become the belle of the ball in the container orchestration world. Its ability to scale and manage mission-critical workloads, along with its strong observability and resiliency features, has earned it widespread acclaim. But Kubernetes does more than manage workloads: it excels at extending its model into a robust API platform that integrates application components as first-class resources. For instance, the proliferation of operators in recent years has dramatically simplified the deployment of database clusters and streamlined data recovery procedures.

With its reconciliation model, Kubernetes opens up new possibilities for integrating external services, positioning it as a prime choice for enhancing or even replacing traditional Infrastructure-as-Code (IaC) workflows. Projects like Crossplane and AWS Controllers for Kubernetes (ACK) showcase its ability to manage CRUD operations on external resources while continuously reconciling them toward a declared desired state. This evolving role has sparked lively discussions among users: how should we best leverage Kubernetes? Should we focus on its capabilities as a scheduler that runs applications, or emphasize its role as a proxy to cloud services?

The challenge of Kubernetes as a proxy for external resources

Kubernetes isn’t just messing around with the basics of orchestration anymore; it’s really showing off, taking on the big world of managing external resources—think cloud services, databases, and the like. As we push it to go beyond its usual tasks, from handling internal workloads to dealing with external services, it’s like watching a juggler suddenly decide to toss chainsaws. Very entertaining, but quite scary at the same time, especially when thinking about how hard it becomes to keep everything safe and in check.

This expansion into broader operational contexts means we need to reassess and adapt our security paradigms. Traditional tools such as Role-Based Access Control (RBAC), while foundational, are increasingly inadequate when Kubernetes acts as a bridge to external resources: Kubernetes RBAC governs requests to the Kubernetes API, not access to a DynamoDB table or an S3 bucket. Implementing a zero-trust security model across this growing variety of security mechanisms requires a more nuanced approach, and Identity and Access Management (IAM) and workload identities look like the right abstractions to bring in.

In addition, each external service may come with its own IAM requirements, presenting a serious challenge at scale, even for seasoned platform or security engineers. This dynamic landscape requires a state of constant vigilance and adaptability, like Otis the otter, capable of solving a Rubik’s Cube blindfolded!

🚨 Wait! If you want to learn more about these topics, consider the following:

Take control: Our EKS IRSA tutorial provides a hands-on opportunity to explore these concepts further.

Stay ahead: Join our growing community Slack channel for the latest Otterize updates and use cases!

The security pitfalls: expanded boundaries and complex IAM patterns

As Kubernetes extends its reach beyond container orchestration and integrates directly with external services and cloud resources, it faces a new and critical security challenge: safeguarding access to these heterogeneous entities without increasing its own blast radius. Unlike Kubernetes' controlled internal environment, external services operate within distinct security domains whose identity and permission models are not native to Kubernetes. To protect this expanded landscape, a zero-trust security model becomes indispensable.

By zero-trust, we mean that the traditional network perimeter is no longer treated as a trust boundary: the focus shifts to verifying identity rather than relying on presumed intentions or trustworthiness. Every access request is authenticated, authorized, and encrypted before access is granted, and microsegmentation and least-privilege principles are applied to minimize lateral movement. The approach is analogous to securing a highly sensitive data center, where even trusted personnel must have their identity verified at every checkpoint. Applying it across Kubernetes and external services is a complex task, because traditional RBAC approaches often struggle to map granular permissions across these disparate domains. The dynamic nature of Kubernetes environments, coupled with evolving external services and their unique IAM requirements, complicates the picture further.

This situation is a recipe for trouble. It is easy to drift away from the principle of least privilege when changes to services or code are not meticulously tracked and reflected in IAM policies. Infrastructure as Code (IaC) can help automate the setup and enforcement of these requirements, but only so far: as new code is deployed and services evolve, keeping policies compliant becomes very difficult. Moreover, there's often no single source of truth, making it hard to ensure that every component stays compliant and secure at all times.

Redefining Kubernetes security and IAM boundaries with on-demand, automated safeguards

Imagine if security automation could be maintained as easily as application code. Otterize offers just that—a platform for just-in-time IAM automation using declarative intents that represent developers' needs—all while keeping security experts in the loop. These intents are maintained alongside application code within version control systems, ensuring that every change is tracked and auditable.

This approach streamlines the modeling of security requirements while keeping production up to date with the latest application changes. Otterize treats security as code and integrates it into the development lifecycle, so access policies follow changes in an application's connection requirements and remain compliant without manual policy edits.

How does it work?

Otterize leverages a combination of Intent-Based Access Control (IBAC) and contextual mechanisms to automate policy generation. Client intents, expressed in high-level, human-readable language, define the desired security posture. This abstraction layer separates security requirements from the complexities of IAM policies and network security configurations (like Kubernetes network policies or Istio Authorization Policies). By focusing on the 'what' (desired security outcomes) rather than the 'how' (specific IAM and security configurations), Otterize simplifies the process, allowing security to scale effectively alongside the development of complex distributed systems.

This approach is enabled by several open-source initiatives:

The Credentials Operator provisions just-in-time credentials for workloads running on Kubernetes. These credentials are provided as various secrets, tailored to the specific service being targeted (e.g., Kubernetes secrets for mTLS certificates, database credentials for PostgreSQL, or IAM roles for AWS). In an EKS context, the Credentials Operator provisions AWS IAM roles and seamlessly binds them with the Kubernetes Service Account of the pod, aligning with IRSA (IAM Roles for Service Accounts) logic.

The Intents Operator simplifies identity and access management between services by letting developers declare the calls each service needs to make in client intents files. Intents are represented as ClientIntents custom resources in Kubernetes, and the Intents Operator can directly configure network policies, Kafka ACLs, and other enforcement points. In the case of AWS, developers can specify the AWS services they need to access, along with the required permissions. When Kubernetes workloads access external resources, they inherit these permissions via the attached service account. This makes security management flexible and scalable: policy changes are implemented by modifying intents rather than by manually updating individual policies.
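As a rough illustration of what "translating intents into policies" means, the following Go sketch turns the AWS calls of a ClientIntents resource into an IAM permission policy document, emitting one Allow statement per declared call. The `AWSCall` type and `PolicyFromIntents` function are hypothetical; the Intents Operator's real translation may differ, for example in how it names, merges, or scopes statements.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// AWSCall mirrors one entry of spec.calls with type: aws in a ClientIntents
// resource (hypothetical Go representation, for illustration only).
type AWSCall struct {
	Name       string   // resource ARN, or "*" for all resources
	AWSActions []string // e.g. "dynamodb:PutItem"
}

type policyStatement struct {
	Effect   string   `json:"Effect"`
	Action   []string `json:"Action"`
	Resource []string `json:"Resource"`
}

type policyDocument struct {
	Version   string            `json:"Version"`
	Statement []policyStatement `json:"Statement"`
}

// PolicyFromIntents builds an IAM policy document with one Allow statement
// per declared call. A call without actions is rejected rather than silently
// producing an empty statement.
func PolicyFromIntents(calls []AWSCall) ([]byte, error) {
	doc := policyDocument{Version: "2012-10-17"}
	for _, c := range calls {
		if len(c.AWSActions) == 0 {
			return nil, fmt.Errorf("call %q declares no awsActions", c.Name)
		}
		doc.Statement = append(doc.Statement, policyStatement{
			Effect:   "Allow",
			Action:   c.AWSActions,
			Resource: []string{c.Name},
		})
	}
	return json.Marshal(doc)
}
```

The point of the abstraction is that developers only edit the `calls` list; the policy JSON is derived from it.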

Let’s get into the weeds: application backend migration from MongoDB to DynamoDB

After the theory, it's time to focus on a real-life use case that illustrates the problems we've mentioned. Our scenario is a microservices application that needs to migrate from a locally managed MongoDB instance to DynamoDB, which is governed by external IAM entities. This presents multiple challenges, including managing the DynamoDB table lifecycle and defining and maintaining proper IAM policies both inside and outside of Kubernetes. In this section, we'll see how to solve these challenges with the Credentials and Intents Operators.

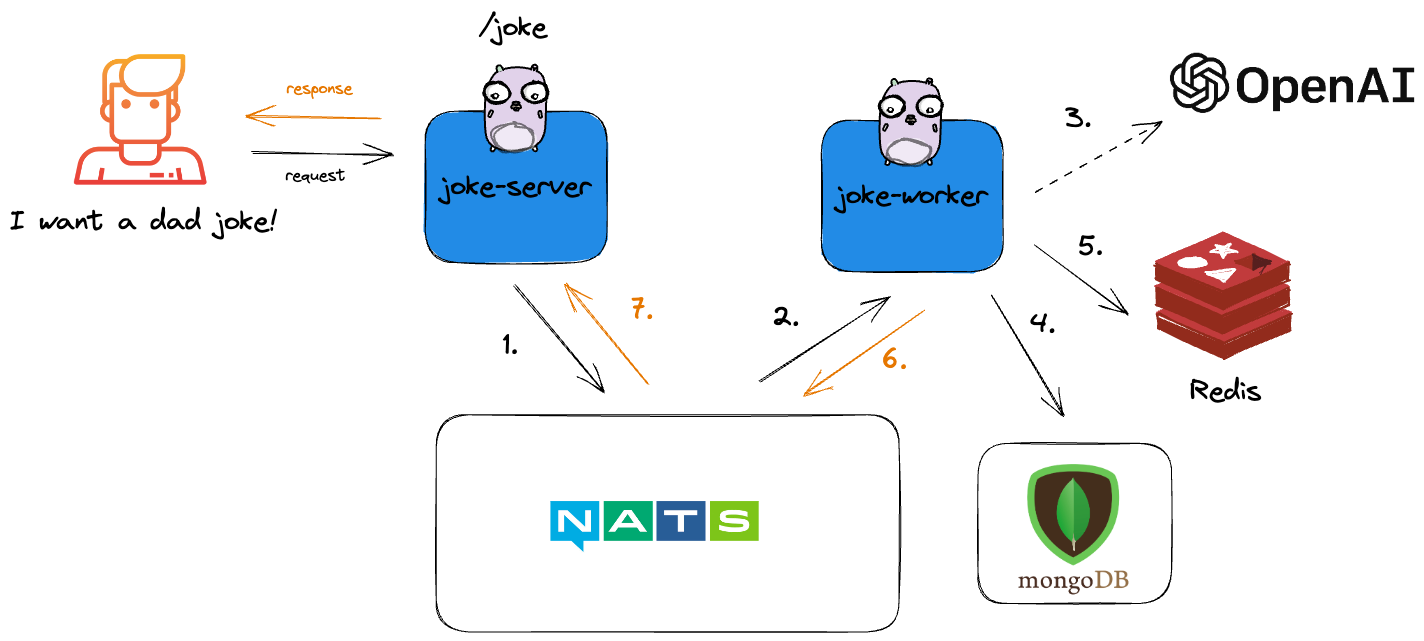

But first, let's introduce the microservices application that we're going to deploy, the dad jokes app, available at this repository, on the “aws” branch.

The application consists of the following components:

- joke-server: A web server that listens for incoming HTTP requests and returns a dad joke.

- joke-worker: A background worker that communicates with the OpenAI GPT-4 API, generates dad jokes, caches them in Redis, and stores them in MongoDB.

- Redis: A caching layer that temporarily stores generated dad jokes.

- MongoDB: A NoSQL database that permanently stores generated dad jokes.

- NATS: A messaging system that facilitates communication between the joke-server and joke-worker.

The application exposes an API on port 8080, which can be queried with HTTP GET requests. For example:

```shell
$ for i in {1..5}; do curl http://localhost:8080/joke; echo -e; done
Joke: Why don't eggs tell jokes? They'd crack each other up.

Joke: Why did the coffee file a police report? It got mugged!

Joke: Why do chicken coops only have two doors? Because if they had four, they'd be chicken sedans!

Joke: I only know 25 letters of the alphabet. I don't know y.

Joke: Why couldn't the bicycle stand up by itself? Because it was two-tired!
```

Super fun, right? 😅

If you’re more of the visual type, here’s a diagram that describes the application’s architecture:

Our goal as developers is now to migrate from MongoDB to AWS DynamoDB because it's a managed service and we don't have to worry—it's always going to be on (and will be taken care of by unicorns flying over rainbows—yes, in my world, unicorns can fly!).

As part of the migration, we're going to deploy the application to EKS. We'll see that Otterize can also simplify EKS building blocks that require IAM roles and policies, such as using EBS to host Redis persistent storage.

To help us manage CRUD operations on DynamoDB tables, we're going to deploy the ACK Controller for DynamoDB. Running as a Kubernetes Deployment, it will enable us to provision a DynamoDB table using a Table custom resource that contains our desired configuration, defined in YAML. The following section describes all the steps required to update and test our microservices application code. Let’s get started!

Step 0: Deploy the EKS cluster

To enable the functionalities we need, the following additions to the EKS cluster configuration are required:

- Enable OIDC for IRSA: IRSA relies on the cluster's OIDC identity provider so that Kubernetes workloads can authenticate to AWS services with their service account tokens and receive temporary credentials.

- Install the EBS CSI Driver as an add-on: This driver enables the provisioning of persistent storage for applications using Amazon Elastic Block Store (EBS). See Steps 5 and 6 below.

The following configuration can be used as a starting point for deploying the cluster: https://docs.otterize.com/code-examples/aws-iam-eks/cluster-config.yaml

Step 1: Deploy the ACK Controller for DynamoDB

See https://gallery.ecr.aws/aws-controllers-k8s/dynamodb-controller to get the latest version.

Let's use Helm to install the controller, as described in the documentation (note: I'm using fish as my shell):

```fish
set -x SERVICE "dynamodb"
set -x RELEASE_VERSION "1.2.10"
set -x ACK_SYSTEM_NAMESPACE "ack-system"
set -x AWS_REGION "eu-west-2"

helm install --create-namespace -n $ACK_SYSTEM_NAMESPACE \
  ack-$SERVICE-controller oci://public.ecr.aws/aws-controllers-k8s/$SERVICE-chart \
  --version=$RELEASE_VERSION \
  --set=aws.region=$AWS_REGION
```

The controller installs five new Custom Resource Definitions (CRDs):

- adoptedresources.services.k8s.aws

- backups.dynamodb.services.k8s.aws

- fieldexports.services.k8s.aws

- globaltables.dynamodb.services.k8s.aws

- tables.dynamodb.services.k8s.aws

Step 2: Create a ClientIntents for ACK

A plethora of policies are required to enable the controller to authenticate and communicate with the AWS API. Instead of the convoluted process of manually provisioning IAM roles, permissions, and trust relationships, we'll leverage IBAC statements. This approach requires only a few YAML lines to define the client's intents.

AWS recommends attaching the AmazonDynamoDBFullAccess managed policy to the controller, but it grants far broader permissions than our application needs (it also covers related services such as DAX and CloudWatch). Instead, we'll restrict the controller to DynamoDB actions only, a subset of the managed policy:

ack-dynamodb-ci.yaml

```yaml
apiVersion: k8s.otterize.com/v1alpha3
kind: ClientIntents
metadata:
  name: ack-dynamodb
  namespace: ack-system
spec:
  service:
    name: ack-dynamodb-controller-dynamodb-chart
  calls:
    - name: '*'
      type: aws
      awsActions:
        - 'dynamodb:*'
```
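How broad is `dynamodb:*`? IAM matches action names case-insensitively and supports the `*` and `?` wildcards, so this pattern covers every DynamoDB action, including destructive ones such as `dynamodb:DeleteTable`. The following Go sketch of that matching rule is a simplified illustration (it ignores resources, conditions, and explicit denies), and the `actionMatches` function is hypothetical.

```go
package main

import "strings"

// actionMatches reports whether an IAM action pattern such as "dynamodb:*"
// covers a concrete action such as "dynamodb:DeleteTable".
// Simplified model: '*' matches any run of characters, '?' matches exactly
// one character, and the comparison is case-insensitive. Resources,
// conditions, and explicit denies are ignored.
func actionMatches(pattern, action string) bool {
	p, a := strings.ToLower(pattern), strings.ToLower(action)
	pi, ai := 0, 0
	star, mark := -1, 0 // index of the last '*' in p, and where it began matching in a
	for ai < len(a) {
		switch {
		case pi < len(p) && (p[pi] == '?' || p[pi] == a[ai]):
			pi++
			ai++
		case pi < len(p) && p[pi] == '*':
			star, mark = pi, ai
			pi++
		case star >= 0:
			// Backtrack: let the last '*' absorb one more character.
			pi = star + 1
			mark++
			ai = mark
		default:
			return false
		}
	}
	// Trailing '*'s can match the empty string.
	for pi < len(p) && p[pi] == '*' {
		pi++
	}
	return pi == len(p)
}
```

For the ACK controller, which creates and deletes tables, that breadth is arguably expected. For a workload that only reads and writes items, listing just the actions it calls would follow least privilege more closely.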

To instruct the Credentials Operator to create the AWS IAM role and automate the trust and authorization processes, we need to label the ACK controller Pod. This Pod is controlled by a Kubernetes Deployment named ack-dynamodb-controller-dynamodb-chart.

We achieve this by adding the label to the Pod template field within the Deployment using the kubectl patch command. This ensures that every pod subsequently created by the Deployment controller will inherit the proper label.

```shell
kubectl patch deployment -n ack-system ack-dynamodb-controller-dynamodb-chart \
  -p '{"spec":{"template":{"metadata":{"labels":{"credentials-operator.otterize.com/create-aws-role":"true"}}}}}'
```

This label triggers the Credentials Operator to perform the necessary operations in both AWS and Kubernetes.
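Hand-escaping JSON inside a shell string is error-prone, so it can help to treat the patch body as data. This Go sketch uses only the standard library to build the same strategic-merge patch for `spec.template.metadata.labels`; the function and constant names are hypothetical.

```go
package main

import "encoding/json"

// createAWSRoleLabel is the Pod label used in this article to ask the
// Credentials Operator to create an AWS IAM role for the workload.
const createAWSRoleLabel = "credentials-operator.otterize.com/create-aws-role"

// podTemplateLabelPatch returns a patch body that adds the given labels to a
// Deployment's Pod template (spec.template.metadata.labels). Existing labels
// are kept, because a merge patch only touches the keys it mentions.
func podTemplateLabelPatch(labels map[string]string) (string, error) {
	patch := map[string]any{
		"spec": map[string]any{
			"template": map[string]any{
				"metadata": map[string]any{
					"labels": labels,
				},
			},
		},
	}
	b, err := json.Marshal(patch)
	return string(b), err
}
```

The resulting string can be passed to `kubectl patch -p`, or used as the patch data of a client-go `Patch` call with `types.StrategicMergePatchType`.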

Now, let’s deploy the ClientIntents manifest with kubectl to ensure the desired permissions are attached to the IAM role:

```shell
kubectl apply -n ack-system -f ack-dynamodb-ci.yaml
```

And voilà! With these few lines of configuration, the Credentials and Intents Operators automate the complex identity and access management workflow across multiple domains. The process translates ClientIntents into AWS IAM permission policies and attaches them to the newly created IAM role.

In AWS, the following IAM tasks have been automated:

- IAM Role Creation

- Trust Relationship Configuration: Establishes the trust with the Kubernetes cluster's OIDC identity provider, allowing the specified Kubernetes Service Account to assume the role using the issued JWT token.

- Permission Creation and Attachment: Creates and assigns the permissions defined in the ClientIntents to the IAM role.

In Kubernetes, the following IRSA tasks have been automated:

- Service Account Creation

- Service Account Annotation: Links the Service Account with the ARN of the AWS IAM role.

- Pod Configuration Update: References the Service Account within the ACK Pod configuration.

- Pod Annotation: Links the Pod with the ARN of the AWS IAM role.
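On the Kubernetes side, IRSA boils down to the `eks.amazonaws.com/role-arn` annotation on the service account, which the EKS Pod Identity Webhook reads. A minimal Go sketch that renders such a ServiceAccount as a JSON manifest (which `kubectl apply -f` also accepts) follows; the account ID, role name, and namespace are placeholders, and the helper names are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// irsaRoleARNAnnotation is the standard IRSA annotation on a ServiceAccount.
const irsaRoleARNAnnotation = "eks.amazonaws.com/role-arn"

type objectMeta struct {
	Name        string            `json:"name"`
	Namespace   string            `json:"namespace"`
	Annotations map[string]string `json:"annotations,omitempty"`
}

type serviceAccount struct {
	APIVersion string     `json:"apiVersion"`
	Kind       string     `json:"kind"`
	Metadata   objectMeta `json:"metadata"`
}

// roleARN formats an IAM role ARN in the standard (aws partition) form.
func roleARN(accountID, roleName string) string {
	return fmt.Sprintf("arn:aws:iam::%s:role/%s", accountID, roleName)
}

// irsaServiceAccount renders a ServiceAccount linked to the given IAM role.
func irsaServiceAccount(name, namespace, arn string) ([]byte, error) {
	return json.Marshal(serviceAccount{
		APIVersion: "v1",
		Kind:       "ServiceAccount",
		Metadata: objectMeta{
			Name:        name,
			Namespace:   namespace,
			Annotations: map[string]string{irsaRoleARNAnnotation: arn},
		},
	})
}
```

When a Pod uses this service account, the webhook injects the `AWS_ROLE_ARN` and `AWS_WEB_IDENTITY_TOKEN_FILE` environment variables, which the AWS SDKs use to call `sts:AssumeRoleWithWebIdentity`.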

The ACK Controller for DynamoDB is now able to manage the lifecycle of the table we need for our application. You can find all the custom resource manifests required by the application in the “aws” branch here in the custom-resources folder.

Next, let’s create the DynamoDB table by deploying the following Table manifest:

dynamodb.yaml

```yaml
apiVersion: dynamodb.services.k8s.aws/v1alpha1
kind: Table
metadata:
  name: jokestable
  annotations:
    services.k8s.aws/region: eu-west-2
spec:
  tableName: JokesTable
  billingMode: PAY_PER_REQUEST
  tableClass: STANDARD
  attributeDefinitions:
    - attributeName: Joke
      attributeType: S
  keySchema:
    - attributeName: Joke
      keyType: HASH
```

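This manifest instructs the ACK controller to create a DynamoDB table named "JokesTable" in the specified AWS region (eu-west-2). The table has a single string attribute, "Joke", which stores the dad jokes and also serves as the table's partition (HASH) key. With PAY_PER_REQUEST billing, we don't need to provision read or write capacity.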
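To see how the manifest maps onto the data the application writes, here is a standard-library Go sketch of the low-level JSON body of a DynamoDB PutItem request for this table. The AWS SDK builds this body for you; it is shown only to illustrate the attribute mapping. It models only the string (`S`) attribute type, and the type and function names are illustrative.

```go
package main

import "encoding/json"

// attributeValue models only the string ("S") variant of a DynamoDB AttributeValue.
type attributeValue struct {
	S string `json:"S"`
}

type putItemRequest struct {
	TableName string                    `json:"TableName"`
	Item      map[string]attributeValue `json:"Item"`
}

// putJokeRequest builds the PutItem body for JokesTable: the item carries a
// single string attribute, "Joke", matching attributeDefinitions/keySchema.
func putJokeRequest(joke string) ([]byte, error) {
	return json.Marshal(putItemRequest{
		TableName: "JokesTable",
		Item:      map[string]attributeValue{"Joke": {S: joke}},
	})
}
```

Because the partition key is the joke text itself, writing the same joke twice replaces the existing item instead of creating a duplicate.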

Step 3: Modify the application code to use DynamoDB

You can find the detailed code in the joke package on the aws branch, where most of the database functions are defined. Here's an example of how to save a joke to the DynamoDB table:

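```go
package joke

import (
	"context"
	"fmt"

	"github.com/aws/aws-sdk-go-v2/aws"
	"github.com/aws/aws-sdk-go-v2/service/dynamodb"
	"github.com/aws/aws-sdk-go-v2/service/dynamodb/types"
)

// SaveJoke stores a single joke in the JokesTable DynamoDB table.
// "Joke" is both the only attribute and the partition (HASH) key.
// Joke is assumed to be a string-based type defined elsewhere in this package.
func SaveJoke(ctx context.Context, svc *dynamodb.Client, joke Joke) error {
	input := &dynamodb.PutItemInput{
		TableName: aws.String("JokesTable"),
		Item: map[string]types.AttributeValue{
			"Joke": &types.AttributeValueMemberS{Value: string(joke)},
		},
	}
	if _, err := svc.PutItem(ctx, input); err != nil {
		// %w keeps the SDK error inspectable with errors.As.
		return fmt.Errorf("error saving joke to DynamoDB: %w", err)
	}
	return nil
}
```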

Note that this implementation aligns with the table manifest configuration defined in the previous step.

Step 4: Create a ClientIntents for the joke worker

Now that the database migration from MongoDB to DynamoDB is complete, we need to explicitly grant the joke-worker permissions to interact with the table by creating a new ClientIntents manifest. As before, the Credentials Operator will assist with IAM role creation, and the Intents Operator will translate our ClientIntents into AWS permission policies.

joke-worker-ci.yaml

```yaml
apiVersion: k8s.otterize.com/v1alpha3
kind: ClientIntents
metadata:
  name: joke-worker
spec:
  service:
    name: joke-worker
  calls:
    - name: "*"
      type: aws
      awsActions:
        - "dynamodb:*"
```

Then, we need to label the Pod template in the joke-worker Deployment. This is done in the DevSpace configuration, which we'll cover in Step 7. It runs the following command, where `${NAMESPACE}` is the application's namespace:

```shell
kubectl patch deployment -n ${NAMESPACE} joke-worker \
  -p '{"spec":{"template":{"metadata":{"labels":{"credentials-operator.otterize.com/create-aws-role":"true"}}}}}'
```

Step 5: Add the EBS CSI driver

The Redis service deployed with our application requires persistent storage in the form of a Kubernetes PersistentVolumeClaim (PVC) resource. We can achieve this by deploying the AWS EBS CSI driver. Within AWS, this requires the following actions, which will be automated by the Credentials and the Intents Operators.

- Create an IAM Role.

- Configure the appropriate permission policies.

- In the role, establish a trust relationship with the cluster's OIDC provider.

First, we deploy the EBS CSI driver using the following command:

```shell
eksctl create addon --name aws-ebs-csi-driver --cluster <cluster_name> --force
```

Traditionally, the command includes the --service-account-role-arn option referencing the IAM role. Since the Credentials Operator will create it for us, we can omit the option and delete the default role created by eksctl.

Step 6: Create a ClientIntents for the CSI controller

As before, the Credentials and Intents Operators will automate the necessary AWS and Kubernetes tasks, provided we label the EBS CSI controller Deployment's Pod template. Additionally, we need to create the following ClientIntents manifest to encapsulate the AWS permissions required by the EBS CSI controller:

ebs-ci.yaml

```yaml
apiVersion: k8s.otterize.com/v1alpha3
kind: ClientIntents
metadata:
  name: ebs
spec:
  service:
    name: ebs-csi-controller
  calls:
    - name: "*"
      type: aws
      awsActions:
        - "ec2:AttachVolume"
        - "ec2:CreateSnapshot"
        - "ec2:CreateTags"
        - "ec2:CreateVolume"
        - "ec2:DeleteSnapshot"
        - "ec2:DeleteTags"
        - "ec2:DeleteVolume"
        - "ec2:DescribeAvailabilityZones"
        - "ec2:DescribeInstances"
        - "ec2:DescribeSnapshots"
        - "ec2:DescribeTags"
        - "ec2:DescribeVolumes"
        - "ec2:DescribeVolumesModifications"
        - "ec2:DetachVolume"
        - "ec2:ModifyVolume"
```

Note: I've summarized the EC2 permissions for brevity. You can find a more granular overview of the required permissions here.

Next, we need to label the EBS CSI controller Deployment's Pod template:

```shell
kubectl patch deployment -n kube-system ebs-csi-controller \
  -p '{"spec":{"template":{"metadata":{"labels":{"credentials-operator.otterize.com/create-aws-role":"true"}}}}}'
```

Then, we deploy the ClientIntents manifest:

```shell
kubectl apply -n kube-system -f ebs-ci.yaml
```

The EBS CSI driver is now ready, and we can create a new Kubernetes StorageClass for our Redis PVC:

gp3-storageclass.yaml

```yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: ebs-sc
provisioner: ebs.csi.aws.com
volumeBindingMode: WaitForFirstConsumer
parameters:
  type: gp3
```

Finally, we apply the StorageClass using kubectl:

```shell
kubectl apply -f gp3-storageclass.yaml
```

Step 7: Deploy the dad jokes application

I personally like to use DevSpace (by Loft) to manage my Kubernetes applications' lifecycle. At a high level, it provides a Kubernetes experience similar to docker-compose, but without having to rely on a local Docker socket (and offering a lot more functionality). With a single command, I can build all application components, deploy them, and then tear them down. DevSpace also allows adding hooks for JSON patches, replacing upstream images with development versions, creating custom pipelines, and more. In my opinion, it's one of the best Swiss army knives for a Kubernetes application developer!

You can find the DevSpace configuration in the dad jokes app repo on the “aws” branch.

In summary, DevSpace is configured to:

- Build and deploy the container images for joke-worker and joke-server

- Deploy the Redis Operator

- Deploy the custom resources required by the application, including the DynamoDB table, the joke-worker ClientIntents, the Redis cache, and the Storage Class.

For a detailed guide on how to deploy the application with DevSpace, you can refer to the repo's README.

First, let’s build the application with the new code for DynamoDB. At the root of the repository:

```shell
cd deploy/devspace
devspace build
```

Then, we deploy the application:

```shell
devspace deploy
```

Step 8: Test the application

This is the exciting part! Since this is a blog and not a live demo, I can assure you that everything will work as planned.

To put the application through its paces, let's request a lot of jokes. After storing 20 jokes in the Redis cache and our database, we should start seeing random jokes served directly from Redis. Let's first generate 30 jokes, then inspect the Redis cache and the DynamoDB table. We should also observe a noticeable speed increase for the last 10 jokes.

```fish
for i in (seq 30); curl http://localhost:8080/joke; echo -e "\n"; end
```

Let’s check the Redis cache from the Pod’s shell:

```text
bash-5.1$ redis-cli
127.0.0.1:6379> SMEMBERS jokes
1) "My boss told me to have a good day... so I went home."
2) "Why don't eggs tell jokes? Because they might crack each other up!"
3) "Why don't skeletons ever go trick or treating? Because they have nobody to go with!"
[....]
20) "Why don't skeletons fight each other? Because they don't have the guts!"
```

20 jokes were indeed cached!

Finally, let's check the content of the DynamoDB table:

```shell
aws dynamodb scan --table-name JokesTable --region eu-west-2 | jq -r '.Items[].Joke.S'
```

Output truncated for brevity…

```text
Why don't skeletons ever go trick or treating? Because they have nobody to go with!
Why can't your hand be 12 inches long? Because then it would be a foot!
My boss told me to have a good day... so I went home.
[...]
Why don't skeletons fight each other? Because they don't have the guts!
If two vegans get in a fight, is it still considered a beef?
How do you make holy water? You boil the hell out of it.
Oh absolutely, here you go: Did you hear about the cheeky balloon cunning into adulthood? He let out a little gas and thought he was no longer lighthearted. (That's a laugh floating in a lighter-air mood.)
Why did the coffee file a police report? It got mugged!
```
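The jq filter `.Items[].Joke.S` simply pulls the string value of the `Joke` attribute out of each scanned item. A standard-library Go sketch of the same extraction follows; the function name is hypothetical, and unlike jq (which would print `null`), it skips items that have no string `Joke` attribute.

```go
package main

import "encoding/json"

// scanOutput models just enough of `aws dynamodb scan` JSON output:
// each item maps attribute names to typed values such as {"S": "..."}.
type scanOutput struct {
	Items []map[string]struct {
		S *string `json:"S"`
	} `json:"Items"`
}

// jokesFromScan mirrors jq '.Items[].Joke.S', skipping items whose "Joke"
// attribute is missing or not a string.
func jokesFromScan(data []byte) ([]string, error) {
	var out scanOutput
	if err := json.Unmarshal(data, &out); err != nil {
		return nil, err
	}
	jokes := make([]string, 0, len(out.Items))
	for _, item := range out.Items {
		if v, ok := item["Joke"]; ok && v.S != nil {
			jokes = append(jokes, *v.S)
		}
	}
	return jokes, nil
}
```

Scan is a paginated operation; the AWS CLI follows pagination automatically by default, so the command above returns the whole table as one JSON document.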

From the request screen recording, you should notice that the last 10 jokes were indeed returned faster from the cache! Mission accomplished!

TL;DR

Kubernetes is evolving beyond workload scheduling (Kubernetes as a scheduler) into managing external cloud resources (Kubernetes as an API proxy). This shift demands new security approaches, especially when Kubernetes interacts with diverse external services, because traditional RBAC is insufficient for the complex IAM patterns that emerge. This article explored how zero-trust with automated IAM can streamline secure access, leveraging Intent-Based Access Control (IBAC) for policy generation and the open source Otterize Credentials and Intents Operators for end-to-end automation.

Conclusion

As Kubernetes extends its reach, its evolution beyond simple container orchestration is fascinating. It is increasingly becoming a powerful API platform with deep integrations into external cloud services and out-of-the-box extensibility, unlocking new levels of functionality. But as the attack surface expands, the importance of secure access to these resources cannot be overstated. Traditional RBAC is often ill-suited to managing external services and adds complexity, which can lead to a weaker security posture.

This article explored how Zero-Trust security principles, or the art of removing security perimeters without impacting protection, and automated IAM generation tools like Otterize can streamline secure access for Kubernetes-managed external resources along with traditional Kubernetes workloads.

Utilizing Intent-Based Access Control (IBAC) provides clear and automated policy generation, ensuring role-based permissions stay tightly aligned with business needs. The migration of our sample application from MongoDB to DynamoDB within a Kubernetes environment offered the perfect example to highlight both the challenges and the value of a strong IAM strategy.

While the approach we've outlined with Otterize proved effective in providing secure access for this specific migration, we recognize that the journey for seamless and secure Kubernetes integration with external services is ongoing. The need for robust and automated IAM and network security solutions within Kubernetes environments is undeniable.

💬 Let’s talk about it!

Shape the future: We value your input! Share your thoughts on which migration tutorials (e.g., MongoDB to DynamoDB or NATS to SQS) would be most beneficial. Also, what other IAM automation scenarios are you interested in? Let us know.

Our community Slack channel is a great place to share your experiences, challenges, and any hiccups you encountered during your migrations—it’s also where you’ll be able to message me directly with any questions you may have. Let's learn from each other and discover solutions together!
