Revisiting network policy management

Explore my journey of revisiting network policy management after three and a half years, reflecting on the challenges faced at Monzo and discovering the evolution towards a solution through intent-based access control for effective service authorization rules.

Written by Jack Kleeman

Published Apr 24 2023

Read time: 3 minutes


It’s been three and a half years since I last worked with network policies at Monzo. At the time, I grew frustrated with how hard it was to specify service-to-service authorization rules in a simple way. Everyone seemed to agree that network policies were difficult to manage once you had lots of services, but there didn’t seem to be much of a solution beyond ‘use Istio’, which is pretty heavy-handed. We stumbled into the realisation that specifying permissions as a property of the calling service significantly improved the situation, and that led to a very successful early implementation of network isolation.

Coming back to the problem years later, I can see there is now a name for what we did: intent-based access control (IBAC). Services specify a set of capabilities, like the ability to consume a Kafka topic or RPC a particular service; these capabilities are reviewed by other teams if necessary, stored in a trusted way, and then compiled into the underlying policies required for each system they refer to. I’ve been friends with the team at Otterize since 2021, when the CTO, Ori, reached out to me to chat about my work at Monzo. Now that they’re building their product in public, I wanted to give their approach to intents a try and see whether I could do in a few hours what I previously hacked together in a few months.
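To make the "compiled into the underlying policies" step concrete, here is a minimal sketch of turning caller-side intents into Kubernetes `NetworkPolicy` manifests. It is an illustration under stated assumptions, not how Monzo or Otterize actually implement it: the function name `compile_network_policies`, the policy naming scheme, and the convention that every pod carries an `app: <service>` label are all hypothetical.

```python
from collections import defaultdict


def compile_network_policies(intents, namespace):
    """Turn caller-side intents into one ingress NetworkPolicy per called service.

    `intents` maps a calling service to the list of services it declares it calls.
    Assumption: every pod is labelled `app: <service name>` (hypothetical convention).
    """
    # Invert the caller -> servers mapping, since a NetworkPolicy is
    # attached to the pods that receive the traffic.
    callers_by_server = defaultdict(set)
    for client, servers in intents.items():
        for server in servers:
            callers_by_server[server].add(client)

    policies = []
    for server in sorted(callers_by_server):
        policies.append({
            "apiVersion": "networking.k8s.io/v1",
            "kind": "NetworkPolicy",
            "metadata": {
                "name": f"allow-callers-of-{server}",  # hypothetical naming scheme
                "namespace": namespace,
            },
            "spec": {
                "podSelector": {"matchLabels": {"app": server}},
                "policyTypes": ["Ingress"],
                "ingress": [{
                    "from": [
                        {"podSelector": {"matchLabels": {"app": client}}}
                        for client in sorted(callers_by_server[server])
                    ]
                }],
            },
        })
    return policies


# Example: cartservice declares that it calls redis-cart.
policies = compile_network_policies(
    {"cartservice": ["redis-cart"]}, "otterize-ecom-demo"
)
```

The point of the inversion is that the permission is written next to the caller, where the team that needs it can propose it, while the enforced rule lands on the callee.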

Figuring out network paths in a cluster

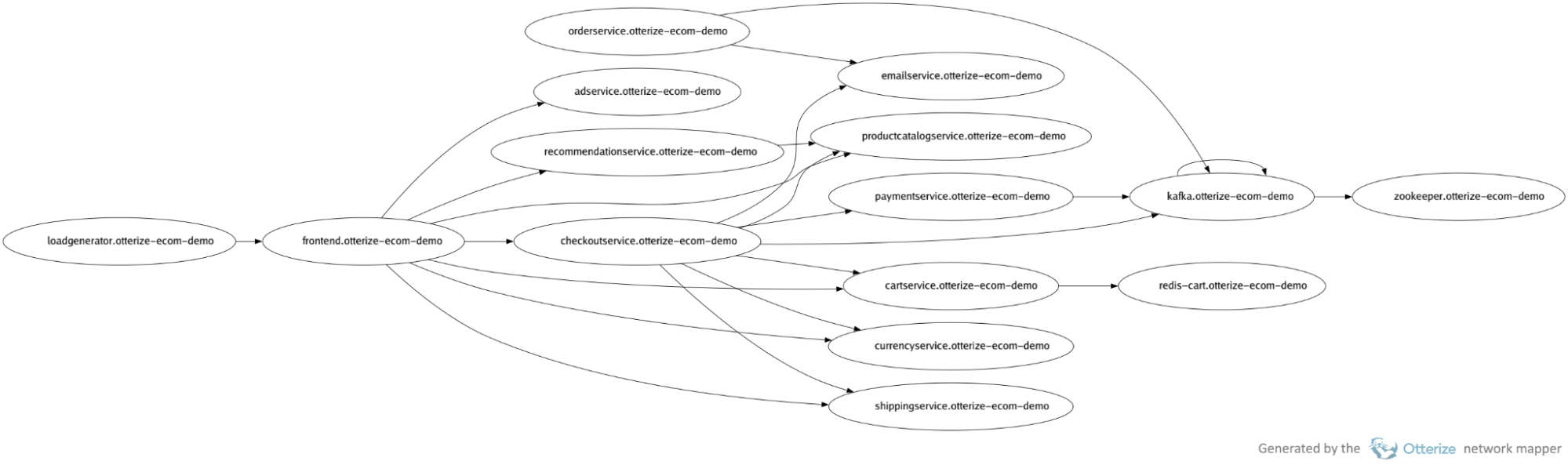

In my project, we had about 9000 ‘A calls B’ relations to discover. To build a first pass of those, we used code analysis (thanks to our very homogeneous codebase, where RPCs are easy to recognize), and we confirmed that analysis using Envoy metrics that reflected recent requests. Otterize seems to have gone with a similar approach with its optional network mapper component, which can sit on every node watching traffic to build a continuous view of traffic patterns without interfering with anything. Let’s try this on a demo cluster after a few minutes of watching traffic:

    $ otterize network-mapper visualize -n otterize-ecom-demo -o map.png

Well that definitely saves us a lot of time. This tool can also directly output intents (which are a Kubernetes CRD; quite a bit more flexible than the filesystem approach I used):

    apiVersion: k8s.otterize.com/v1alpha2
    kind: ClientIntents
    metadata:
      name: cartservice
      namespace: otterize-ecom-demo
    spec:
      service:
        name: cartservice
      calls:
        - name: redis-cart

So we could just apply those intents in ‘shadow mode’ (i.e., without enforcement) and observe whether Otterize warns us of any traffic that wasn’t covered by the rules we discovered. This two-phase commit strategy via a shadow mode is critical to deploying authorization policies safely, and it’s really not trivial to build; every CNI has its own way of informing you of traffic that is dropped, and ingesting that information isn’t easy.
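The shadow-mode idea can be sketched in a few lines. This is a toy model of the decision logic only, not Otterize's implementation; the `IntentChecker` class and its fields are hypothetical names, and a real system would get its observations from the CNI or a traffic mapper rather than from direct method calls.

```python
from dataclasses import dataclass, field


@dataclass
class IntentChecker:
    """Checks observed connections against declared intents.

    In shadow mode (enforce=False) nothing is blocked; traffic that no
    intent covers is only recorded so it can be reviewed later.
    """
    intents: dict          # caller -> set of services it may call
    enforce: bool = False  # False means shadow mode
    uncovered: list = field(default_factory=list)

    def allow(self, client, server):
        if server in self.intents.get(client, set()):
            return True
        # Not covered by any intent: always record it, but only
        # reject the connection once enforcement is switched on.
        self.uncovered.append((client, server))
        return not self.enforce


# Phase one: observe without blocking anything.
shadow = IntentChecker({"cartservice": {"redis-cart"}})
shadow.allow("cartservice", "redis-cart")
```

Once `uncovered` stays empty for long enough, flipping `enforce` to `True` is the second phase: the same rules now reject anything they do not cover.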

Visualising in the cloud

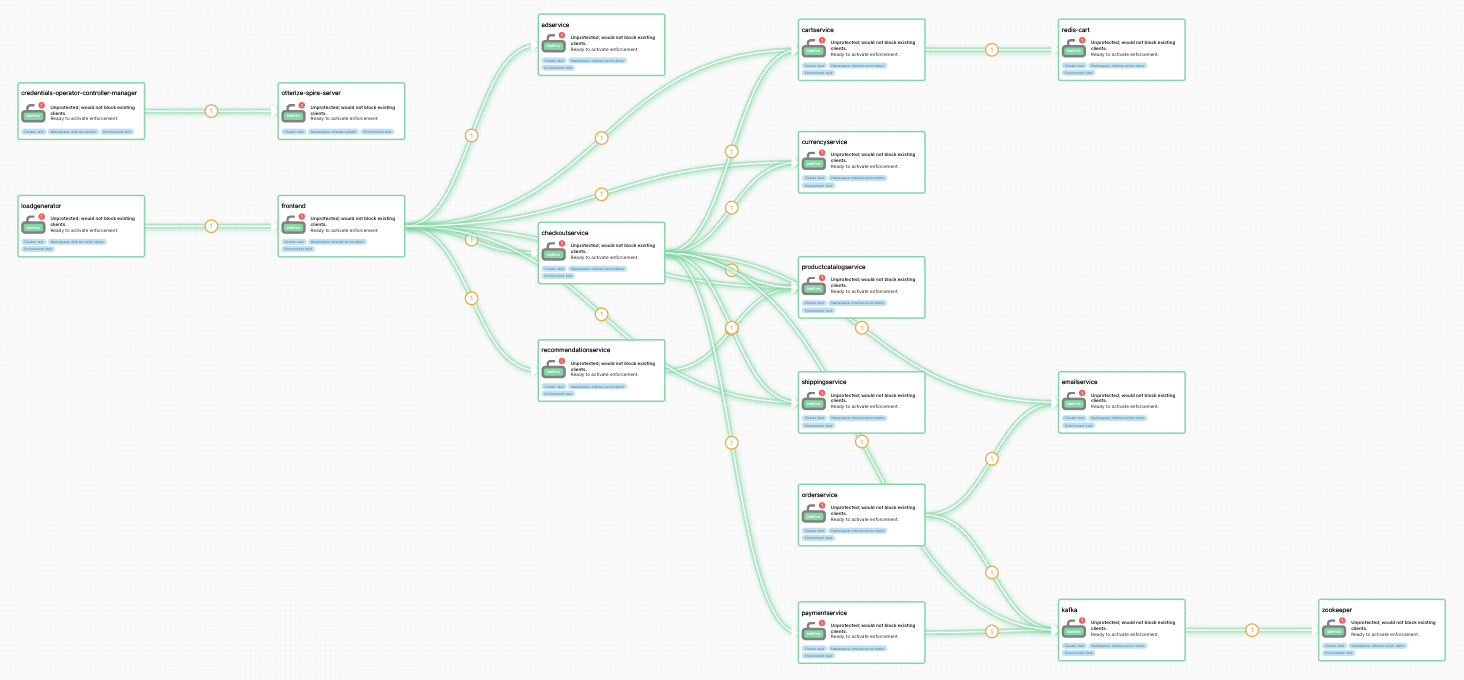

Producing DOT files from generated intent files for input into a graph visualizer was as far as I ever got in my project, but Otterize has really taken visualisation to the next level with their cloud UI. By adjusting some Helm parameters I can give the control plane an Otterize Cloud token, and within a few seconds I can see this:

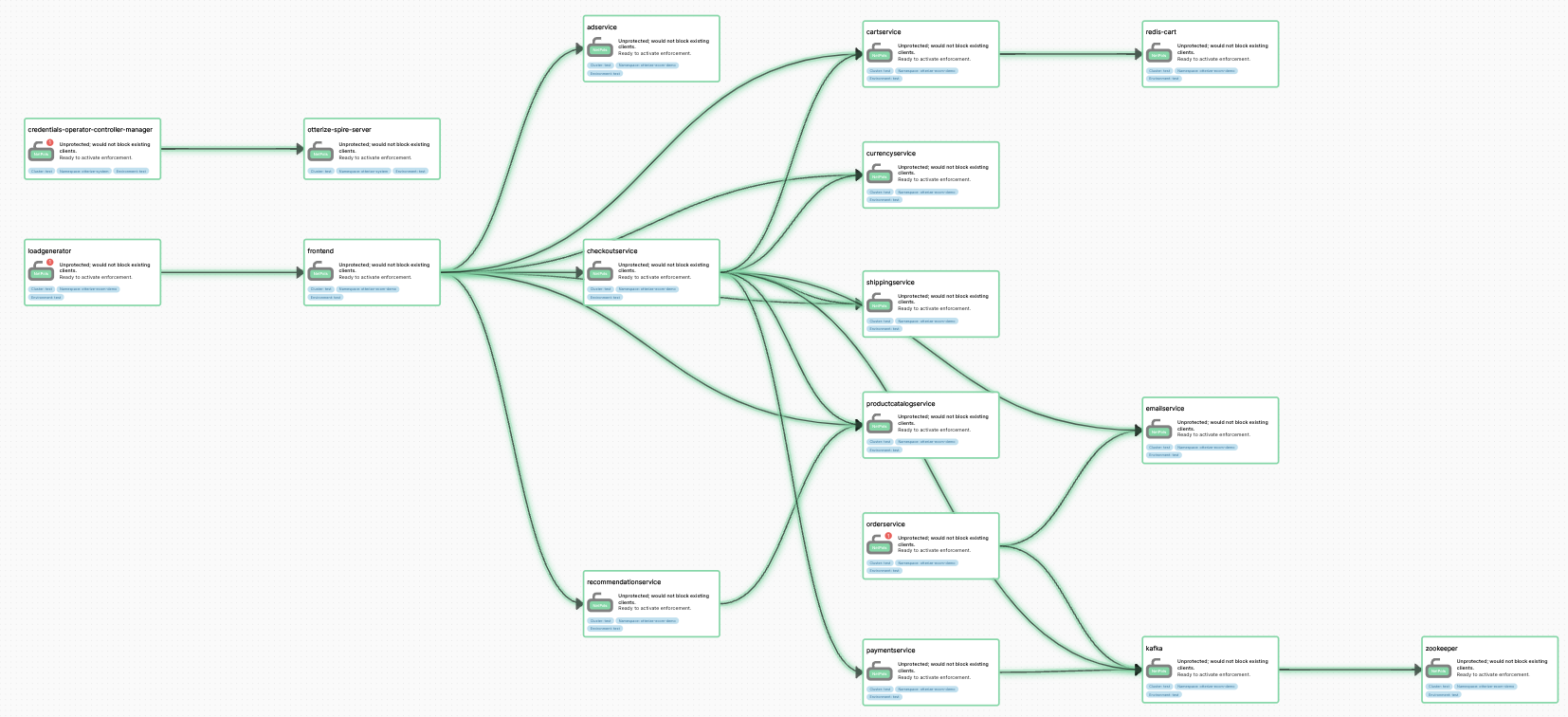

It’s really interesting to see a graph that’s informed by the actual network patterns, not just the intents, because it actually shows me what security holes exist; the yellow warnings indicate that these green paths are not specified with intents, so technically anything on the network can reach my services. I know I’m in shadow mode, so I can just apply all the generated intents safely. A few seconds later, the graph changes:

Now I can be confident that these discovered network paths are covered by the intents. At this stage in production I’m probably going to wait a few weeks to see if there are any rarely-travelled paths for which I don’t have intents; they would show up on the graph with a warning. So the network mapper is filling a similar role to the iptables metrics and logs that I previously used to alert on network flows that weren’t covered by existing rules. My approach really only worked for Calico, so it’s nice to have something more general.
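For a rough idea of what a graph that combines observed traffic with intents involves, here is a small sketch that emits Graphviz DOT and flags observed edges that no intent covers. The function name `to_dot`, the colours, and the `no intent` label are illustrative assumptions; this is neither the Otterize Cloud rendering nor the tooling I built at Monzo.

```python
def to_dot(observed, intents):
    """Render observed (client, server) edges as Graphviz DOT.

    `intents` maps a caller to the set of services it may call.
    Edges without a matching intent are drawn in orange with a warning label.
    """
    lines = ["digraph services {"]
    for client, server in sorted(set(observed)):
        covered = server in intents.get(client, set())
        attrs = 'color="green"' if covered else 'color="orange", label="no intent"'
        lines.append(f'  "{client}" -> "{server}" [{attrs}];')
    lines.append("}")
    return "\n".join(lines)


# Example: one observed path, covered by the intent shown earlier.
print(to_dot([("cartservice", "redis-cart")], {"cartservice": {"redis-cart"}}))
```

Feeding the output to `dot -Tpng` produces a picture in which rarely-travelled, uncovered paths stand out as soon as they are observed.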

Overall, I’m glad that these building blocks now exist, so that teams don’t have to reinvent the wheel when it comes to service-to-service authorization. At the time, it felt like no one else was really using network policies in production beyond toy projects. I hope that’s changing, and I’m glad we have Otterize to help!
